Robots.txt is a text file that allows a website to provide instructions to web-crawling bots.

It tells search engines like Google which parts of your website they can and cannot access when indexing your site.

That makes robots.txt a powerful tool for SEO. It can also be used to discourage search engines from crawling certain pages, although on its own it does not guarantee that those pages stay out of Google search results.

How Does Robots.txt Work?

Robots.txt is a plain text file that you create to tell search engine bots which pages they may crawl on your website. It is stored in the root directory of your website, for example at https://example.com/robots.txt.

Search engines like Google use web crawlers, sometimes called web robots, to archive and categorize websites. Most bots are configured to search for a robots.txt file on the server before they read any other file from the website. A bot does this to see if a website’s owner has special instructions on crawling and indexing their site.
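As a rough illustration of where a bot looks first, the sketch below derives the robots.txt location from any page URL. The function name `robots_url` and the sample page URL are hypothetical, and the sketch assumes the file sits at the root of the host, as described above.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host; the path is always /robots.txt.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://example.com/blog/hello-world/?utm=1"))
```

Whatever page the bot was about to read, the lookup lands on the same file at the site root.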

The robots.txt file contains a set of instructions that request the bot to ignore specific files or directories. This may be for privacy or because the website owner believes that the contents of those files and directories are irrelevant to the website’s categorization in search engines.

Here is an example of a robots.txt file:

User-Agent: *

Allow: /wp-content/uploads/

Disallow: /wp-content/plugins/

Disallow: /wp-admin/

Sitemap: https://example.com/sitemap_index.xml

In this example, the asterisk ‘*’ after ‘User-Agent’ specifies that the instructions apply to all bots, including every search engine.

Next, we allow search engines to crawl files in our WordPress uploads folder. Then, we disallow them from crawling the plugins and WordPress admin folders.

Note that if you don’t disallow a URL, then search engine bots will assume they can crawl it.

Finally, we have provided the URL of our XML sitemap.
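To see how a well-behaved bot might read these rules, here is a minimal sketch using Python's standard `urllib.robotparser` module. It is only one possible interpretation: real search engines use their own parsers, which can differ in details such as rule precedence. The test paths (`logo.png`, `/blog/`) are made up for illustration, and `site_maps()` assumes Python 3.8 or newer.

```python
from urllib.robotparser import RobotFileParser

# The example rules, written without blank lines between directives
# because this parser treats a blank line as the end of a rule group.
EXAMPLE_RULES = """\
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/
Sitemap: https://example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(EXAMPLE_RULES.splitlines())

# Hypothetical paths: one allowed, one disallowed, one not mentioned at all.
for path in ("/wp-content/uploads/logo.png", "/wp-admin/", "/blog/"):
    url = "https://example.com" + path
    print(path, parser.can_fetch("*", url))

# Sitemap URLs declared in the file.
print(parser.site_maps())
```

The `/blog/` path is not mentioned in the rules, so the parser treats it as crawlable, which matches the note above about URLs that are not disallowed.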

How to Create a Robots.txt File in WordPress

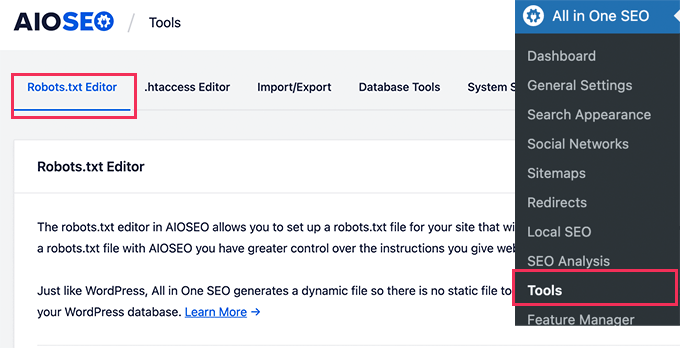

The easiest way to create a robots.txt file is using All in One SEO. It’s the best WordPress SEO plugin on the market and comes with an easy-to-use robots.txt file generator.

Another tool you can use is WPCode, a powerful code snippets plugin that lets you add custom code to your website easily and safely. The Pro version includes a handy feature that lets you quickly edit the robots.txt file.

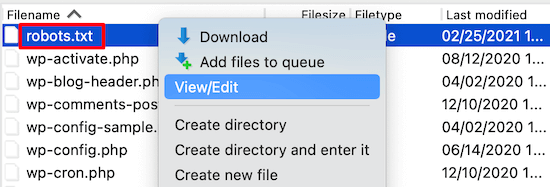

However, if you are familiar with code, then you can create the robots.txt file manually. You will need to use an FTP client to edit the robots.txt file. Alternatively, you can use the file manager provided by your WordPress hosting.
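If you go the manual route, the file is just a few lines of text. The sketch below shows one way you might generate it before uploading. The helper `build_robots_txt` is hypothetical, not part of WordPress or any plugin, and the file is written to a temporary folder purely for demonstration.

```python
import tempfile
from pathlib import Path


def build_robots_txt(allow, disallow, sitemap=None, user_agent="*"):
    """Render robots.txt text for a single user-agent group."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Allow: {path}" for path in allow]
    lines += [f"Disallow: {path}" for path in disallow]
    if sitemap:
        lines.append(f"Sitemap: {sitemap}")
    return "\n".join(lines) + "\n"


content = build_robots_txt(
    allow=["/wp-content/uploads/"],
    disallow=["/wp-content/plugins/", "/wp-admin/"],
    sitemap="https://example.com/sitemap_index.xml",
)

# Written to a temporary folder here; in practice you would upload the file
# to your site's root directory with your FTP client or file manager.
target = Path(tempfile.mkdtemp()) / "robots.txt"
target.write_text(content, encoding="utf-8")
print(content)
```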

For more details on creating a robots.txt file, see our guide on how to optimize your WordPress robots.txt for SEO.

How to Use Robots.txt to Stop Search Engines Crawling a Site

Search engines are the biggest source of traffic for most websites. However, there are a few reasons why you might want to discourage search engines from indexing your site.

For example, if you are still building your website, then you won’t want it to appear in search results. The same is true of private blogs and business intranets.

You can use disallow rules in your robots.txt file to ask search engines not to crawl your entire website or just certain pages. You’ll find detailed instructions in our guide on how to stop search engines from crawling a WordPress site.
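As a small sketch of the difference between the two cases, the snippet below checks a site-wide rule and a single-page rule with Python's standard `urllib.robotparser`. The helper `is_allowed` and the `/private-page/` and `/blog/` paths are hypothetical, and real search engines may interpret edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# "Disallow: /" matches every path, so it asks bots to skip the whole site.
BLOCK_ALL = "User-agent: *\nDisallow: /\n"
# A more specific path only covers URLs that start with it.
BLOCK_ONE_PAGE = "User-agent: *\nDisallow: /private-page/\n"


def is_allowed(rules: str, path: str, agent: str = "*") -> bool:
    """Return True if the given rules let the agent crawl the path."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(agent, "https://example.com" + path)


print(is_allowed(BLOCK_ALL, "/blog/"))               # whole site blocked
print(is_allowed(BLOCK_ONE_PAGE, "/private-page/"))  # only this page blocked
print(is_allowed(BLOCK_ONE_PAGE, "/blog/"))          # everything else open
```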

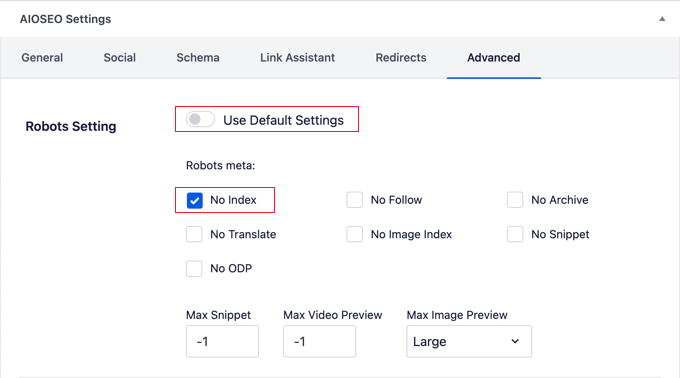

You can use tools like All in One SEO to automatically add these rules to your robots.txt file.

It is important to note that not all bots will honor a robots.txt file. Some malicious bots will even read the robots.txt file to find which files and directories they should target first.

Also, even if a robots.txt file instructs bots to ignore specific pages on the site, those pages may still appear in search results if other crawled pages link to them.
